Quick Overview: This blog explores the intricacies of handling errors and downtime in real-time data streaming with Node.js, emphasizing its non-blocking, event-driven architecture for efficient data processing. It delves into unique aspects such as stream error propagation and the importance of implementing automated reconnection mechanisms and managing backpressure. It highlights why Node.js is a preferred choice over other programming languages for data streaming.

In the fast-paced digital world, real-time data streaming has become a cornerstone of modern applications, enabling seamless, instantaneous communication and data transfer. With its non-blocking I/O model, Node.js stands out as a prime candidate for building efficient, scalable, real-time data streaming applications. However, handling errors and downtime well is crucial for maintaining the reliability and performance of these applications. To unlock the full potential of real-time data streaming in your projects, hire Node.js developers today.

Error Handling in Real-Time Data Streaming

One of the most challenging aspects of real-time data streaming is managing errors gracefully. In Node.js, streams are designed to handle data in chunks, making them susceptible to errors at any point in the data flow. From network issues to file system errors, the unpredictable nature of streaming data requires robust error-handling mechanisms.

Handling Errors in Node.js Streams

Errors in Node.js streams can occur for various reasons, such as network problems, file access issues, or invalid data. Effective error handling ensures that these issues do not crash the application and that appropriate measures are taken to address them.

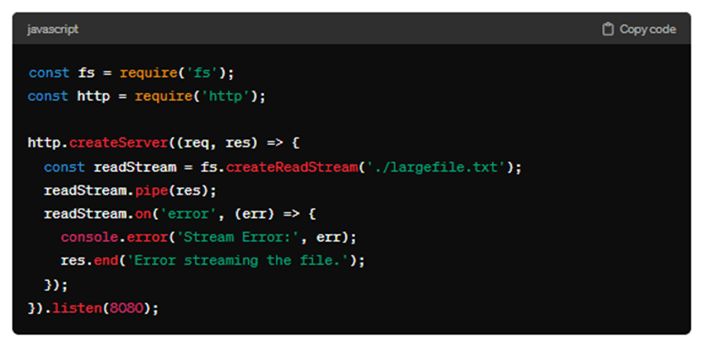

1. Use Case: File Streaming

Consider an application that streams large files from disk to clients over the network. Using Node.js, you can create a readable stream from a file and pipe it to the response object of an HTTP server request.

In this example, an error event listener is attached to the readStream. If an error occurs (e.g., the file does not exist), the error is logged and the client is informed of the issue, which keeps the server running and gives the user feedback. Without such a listener, the unhandled error event would be thrown as an exception and could crash the process.
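The file-streaming case described above could be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: the helper name `streamFile` and the response text are assumptions made for this example, and `res` is assumed to be an HTTP response (or any writable exposing `headersSent` and `statusCode`).

```javascript
const fs = require('node:fs');

// Hypothetical helper: streams a file into an HTTP response and handles
// read errors so they never surface as an unhandled 'error' event.
function streamFile(filePath, res) {
  const readStream = fs.createReadStream(filePath);

  readStream.on('error', (err) => {
    console.error('Read stream error:', err.message);
    if (res.headersSent) {
      // Part of the body is already on the wire, so abort the response.
      res.destroy(err);
      return;
    }
    // Nothing has been sent yet: report the failure to the client.
    res.statusCode = err.code === 'ENOENT' ? 404 : 500;
    res.end('Unable to read the requested file.');
  });

  // pipe() moves the data but does not forward errors, hence the listener above.
  readStream.pipe(res);
  return readStream;
}
```

Inside a server this might be called as `http.createServer((req, res) => streamFile('./video.mp4', res))`, where the file path is a placeholder.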

- Process Insight: Stream Error Propagation

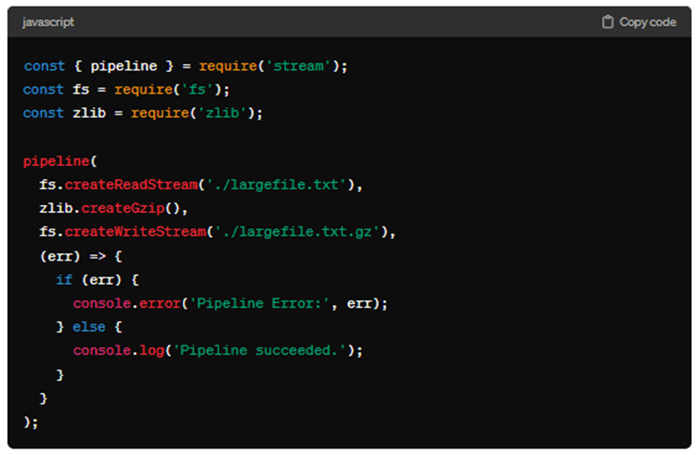

A powerful feature of Node.js streams is their ability to propagate errors through a pipeline. When using the pipeline function to chain multiple streams, errors in any stream are forwarded to the callback function, allowing centralized error handling.

In this scenario, if any part of the pipeline (reading, compressing, or writing) encounters an error, it is captured in the final callback. This method simplifies error handling in complex stream pipelines.
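A read, compress, write pipeline like the one described above might look like this. It is a sketch under assumptions: `compressFile` and its `done` callback are names chosen for illustration, while `pipeline`, `createGzip`, `createReadStream`, and `createWriteStream` are standard Node.js APIs.

```javascript
const fs = require('node:fs');
const zlib = require('node:zlib');
const { pipeline } = require('node:stream');

// Hypothetical helper: gzip `source` into `destination`.
// `done` receives an error from any stage, or undefined on success.
function compressFile(source, destination, done) {
  pipeline(
    fs.createReadStream(source),        // stage 1: read
    zlib.createGzip(),                  // stage 2: compress
    fs.createWriteStream(destination),  // stage 3: write
    (err) => {
      // Single place to handle a failure from any of the three stages.
      if (err) {
        console.error('Pipeline failed:', err.message);
      }
      done(err);
    }
  );
}
```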

Managing Downtime in Real-Time Streaming

Downtime, whether due to server maintenance, network issues, or other reasons, poses significant challenges in real-time data streaming. Implementing strategies to manage downtime effectively can enhance the resilience and reliability of streaming applications.

1. Use Case: Live Video Streaming

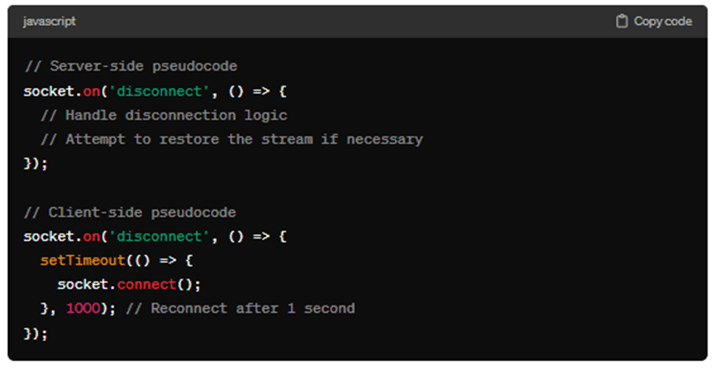

Maintaining a continuous stream is crucial for user experience in a live video streaming application. Downtime can occur due to server issues or network instability.

The application can implement a reconnection mechanism on the client side to handle this. If the stream is interrupted, the client attempts to reconnect to the server after a short delay. On the server side, Node.js can monitor client connections and manage stream restarts efficiently.

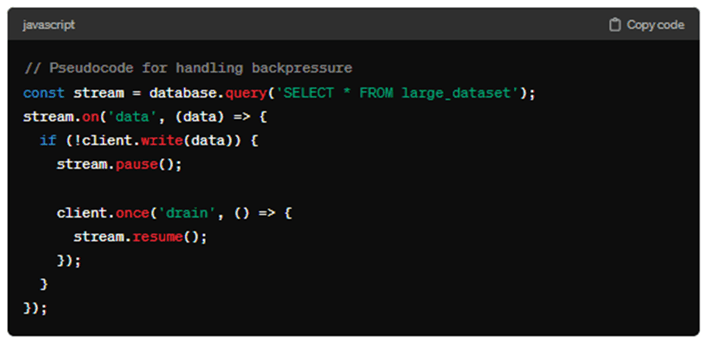
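The "reconnect after a short delay" idea can be sketched independently of any particular transport. In the example below, `connectWithRetry` is a hypothetical helper, and `connect` stands for whatever function opens the stream in your application (assumed to return a promise); the default delay and attempt count are arbitrary.

```javascript
const { setTimeout: sleep } = require('node:timers/promises');

// Hypothetical helper: `connect` is any function returning a promise that
// resolves with an open connection or rejects when the attempt fails.
async function connectWithRetry(connect, { delayMs = 1000, maxAttempts = 5 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await connect();
    } catch (err) {
      lastError = err;
      console.warn(`Attempt ${attempt} failed: ${err.message}`);
      // Wait a short, fixed delay before the next attempt.
      if (attempt < maxAttempts) await sleep(delayMs);
    }
  }
  // Every attempt failed: surface the most recent error to the caller.
  throw lastError;
}
```

A fixed delay keeps the sketch simple; production clients often increase the delay between attempts so that a recovering server is not flooded.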

- Process Insight: Backpressure Management

Handling backpressure is vital in managing downtime and ensuring data integrity. Backpressure occurs when the data source sends data faster than the destination can handle. When streams are connected with pipe or pipeline, Node.js manages backpressure automatically, so data is neither lost nor allowed to pile up in memory during disruptions. Code that calls write directly must check its return value and wait for the drain event before writing more.

For example, when data is streamed from a database to a client and the client cannot keep up with the data rate (due to network issues or processing delays), the Node.js stream pauses reading from the database. The stream resumes once the client is ready to receive more data, so memory use stays bounded and no data is dropped.
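When writing to a stream by hand rather than through pipe or pipeline, the pause-and-resume behavior has to be implemented explicitly. The following is a minimal sketch; `writeAll` is a hypothetical helper name, while `write`, the `drain` event, `events.once`, and `finished` are standard Node.js APIs.

```javascript
const { once } = require('node:events');
const { finished } = require('node:stream/promises');

// Hypothetical helper: writes every chunk to `writable` while respecting
// backpressure, then ends the stream and waits for it to flush.
async function writeAll(writable, chunks) {
  for (const chunk of chunks) {
    // write() returns false once the internal buffer reaches highWaterMark.
    if (!writable.write(chunk)) {
      // Pause until the destination has drained its buffer.
      await once(writable, 'drain');
    }
  }
  writable.end();
  await finished(writable);
}
```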

Unique Fact: Stream Error Propagation

A lesser-known fact about Node.js streams is that error propagation depends on how the streams are connected. The pipe method does not forward errors from one stream to the next, so each stream needs its own error listener. The pipeline function, by contrast, reports an error from any stream to a single callback and destroys the other streams in the chain, so developers can manage errors centrally rather than having to handle them at each stage of the stream.

Best Practices for Using Node.js for Real-time Data Streaming

1. Using the pipeline and destroy Methods

The pipeline function in Node.js is a critical tool for managing stream errors. It simplifies stream piping and ensures errors are correctly handled, preventing memory leaks and unexpected application crashes. The destroy method terminates a stream immediately, optionally with an error, and releases its underlying resources so that nothing is left open after a failure.
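One place where calling destroy directly is useful is a stalled stream that never finishes on its own. The sketch below is an illustration only: `withDeadline` and its error message are assumptions for this example, while `destroy` and the `close` event are standard stream features.

```javascript
// Hypothetical helper: destroys `stream` with an error if it has not
// closed within `ms` milliseconds.
function withDeadline(stream, ms) {
  const timer = setTimeout(() => {
    // destroy(err) emits 'error' and then 'close', releasing resources.
    stream.destroy(new Error(`Stream did not finish within ${ms} ms`));
  }, ms);

  // If the stream closes on its own first, cancel the pending deadline.
  stream.once('close', () => clearTimeout(timer));
  return stream;
}
```

The caller still needs an error listener (or a pipeline callback) on the stream to receive the deadline error.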

2. Embrace the Use of Promises and Async/Await

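Promisify stream operations: Convert callback-based stream operations to promises using the util.promisify method, or use the promise-based pipeline and finished functions that recent Node.js versions export from the stream/promises module. This enables the use of async/await for better readability and error handling in asynchronous code.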
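With the promise-based `pipeline` from `stream/promises` (available in recent Node.js versions), stream errors can be handled with an ordinary try/catch. A minimal sketch, in which `gzipFile` and its boolean return value are choices made for this example:

```javascript
const fs = require('node:fs');
const zlib = require('node:zlib');
const { pipeline } = require('node:stream/promises');

// Hypothetical helper: gzip `source` into `destination` using async/await.
// Resolves to true on success and false if any stage fails.
async function gzipFile(source, destination) {
  try {
    await pipeline(
      fs.createReadStream(source),
      zlib.createGzip(),
      fs.createWriteStream(destination)
    );
    return true;
  } catch (err) {
    // An error from any stage rejects the promise and lands here.
    console.error('Compression failed:', err.message);
    return false;
  }
}
```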

3. Optimize for Scalability and Performance

Leverage clustering: Use the Node.js cluster module to take advantage of multi-core systems, distributing the load and improving the fault tolerance of your streaming application.
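The clustering approach could be sketched as follows. `startCluster` and `startWorker` are hypothetical names: `startWorker` stands for whatever function boots your streaming server inside each worker process. Replacing a crashed worker, as shown, is one simple way to get the fault tolerance mentioned above.

```javascript
const cluster = require('node:cluster');
const os = require('node:os');

// Hypothetical helper: the primary process forks one worker per CPU core
// and replaces any worker that exits; each worker runs `startWorker`.
function startCluster(startWorker, workerCount = os.cpus().length) {
  if (cluster.isPrimary) {
    for (let i = 0; i < workerCount; i++) {
      cluster.fork();
    }
    cluster.on('exit', (worker, code, signal) => {
      console.warn(`Worker ${worker.process.pid} exited (${signal || code}); starting a replacement`);
      cluster.fork();
    });
  } else {
    // Worker processes share the server port and handle connections.
    startWorker();
  }
}
```

A call such as `startCluster(() => server.listen(3000))` would start it, where `server` and the port number are placeholders.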

Implement load balancing: Use load balancers to distribute incoming connections and requests evenly across multiple instances of your application, enhancing its scalability and reliability.

4. Prepare for Failure

Implement logging and monitoring: Use logging and monitoring tools to keep an eye on the system's health and performance. This can provide insights into potential issues before they lead to downtime.

Plan for redundancy: Design your system with redundancy in mind. Having backup systems or data replicas can ensure that your application remains operational even if part of the system fails.

Handling Downtime in Real-Time Streaming

Whether planned or unplanned, downtime is inevitable in any real-time data streaming application. The key to maintaining a reliable system lies in how downtime is managed. Node.js offers several strategies to ensure applications can recover gracefully from downtime, minimizing the impact on end-users.

Automated Reconnection Mechanisms

Use Case: One everyday use case in real-time data streaming is implementing automated reconnection mechanisms. Node.js applications can detect when a stream is disconnected due to network issues or server downtime and automatically attempt to reconnect. This approach ensures that data streaming resumes with minimal delay, enhancing the application's reliability.
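One way to automate this is to reopen the stream whenever it closes, waiting a little longer after each consecutive failure. The sketch below rests on assumptions not in the text above: exponential backoff is a common refinement rather than something Node.js provides, and `backoffDelay`, `keepConnected`, `openStream`, and the default timings are hypothetical names and values.

```javascript
// Delay grows as base * 2^attempt and is capped at maxMs.
function backoffDelay(attempt, baseMs = 500, maxMs = 30000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Hypothetical helper: `openStream` returns a new stream-like object that
// emits 'data', 'error', and 'close'. `schedule` defaults to setTimeout
// and is injectable to make the logic easy to test.
function keepConnected(openStream, onData, schedule = setTimeout) {
  let attempt = 0;

  function open() {
    const stream = openStream();
    stream.on('data', (chunk) => {
      attempt = 0; // data is flowing again, so reset the backoff
      onData(chunk);
    });
    stream.on('error', (err) => console.error('Stream error:', err.message));
    // Standard streams emit 'close' after an error too, so reconnect only here.
    stream.on('close', () => schedule(open, backoffDelay(attempt++)));
  }

  open();
}
```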

Strategy: Using Backpressure to Manage Data Flow

Backpressure is a concept often overlooked in the context of handling downtime. In Node.js, backpressure refers to controlling the data flow to prevent overwhelming the system. During periods of downtime, especially in scenarios where data is being buffered, managing backpressure becomes critical to prevent memory overflow and ensure smooth recovery once the system is back online.

Why Use Node.js for Data Streaming Instead of Other Programming Languages: A Comparison Table

Node.js is often preferred for data streaming applications over other programming languages due to its unique features and advantages, especially in handling I/O-bound tasks. Here's a comparison table highlighting why Node.js is a popular choice for data streaming compared to other common programming languages like Python, Java, and Ruby.

| Feature | Node.js | Python | Java | Ruby |
| --- | --- | --- | --- | --- |
| I/O Model | Non-blocking and event-driven; ideal for data streaming because it can handle numerous connections simultaneously. | Blocking I/O by default, though asynchronous I/O is possible with libraries like asyncio. | Blocking I/O by default, but non-blocking I/O is possible with NIO (New I/O). | Primarily blocking I/O; concurrency can be achieved using threads or evented I/O libraries like EventMachine. |
| Performance for I/O Operations | High performance for I/O-bound tasks due to its non-blocking nature. | Good performance, but may require more effort to achieve non-blocking I/O. | Strong performance, especially with large-scale, compute-intensive applications. | Suitable for I/O-bound tasks but generally slower than Node.js due to its blocking nature. |
| Real-time Data Processing | Excellently suited for real-time data processing due to its event-driven model. | Suitable with the use of specific libraries and frameworks for asynchronous operations. | Can be configured for real-time processing but is typically more complex than Node.js. | Capable but not as efficient as Node.js for real-time scenarios. |
| Scalability | Highly scalable due to its lightweight and event-driven nature, making it easy to scale horizontally. | Scalable with the use of additional tools and libraries. | Scalable, especially in enterprise environments, but often requires more resources. | Scalable with additional effort and tools, but not as inherently scalable as Node.js. |
| Community and Libraries | Large and active community with a vast ecosystem of libraries for streaming and other tasks. | Huge community with a wide range of libraries for various applications. | Huge and mature ecosystem, especially for enterprise solutions. | Large community with a rich set of gems, though not as extensive as Node.js for streaming. |
| Ease of Development | JavaScript for both client and server side simplifies development and reduces context switching. | Widely known and used, but requires switching between languages for front-end and back-end. | Requires more boilerplate and has a steeper learning curve than Node.js. | Easy to read and write but may require more effort for real-time data streaming applications. |
| Use Cases | Ideal for building real-time applications like chats, online gaming, and live updates. | General purpose: strong in web development, data analysis, and scientific computing. | Robust in enterprise, web applications, and big data. | Web development and small to medium web applications. |

Why Node.js Stands Out for Data Streaming:

- Non-blocking I/O Model: Node.js's non-blocking, event-driven I/O model makes it highly efficient for data streaming applications, where the ability to handle numerous simultaneous connections with minimal overhead is crucial.

- Unified JavaScript Development: Using JavaScript on both the server and client sides streamlines development workflows and reduces the cognitive load on developers, making it easier to build and maintain real-time data streaming applications.

- Vast Ecosystem: The Node.js ecosystem includes libraries and tools for easy and efficient data streaming, such as the built-in streams API, Socket.IO, and event-driven programming models.

- Scalability: Node.js's lightweight core and asynchronous event-driven architecture allow applications to scale horizontally quickly, accommodating more connections without significantly increasing hardware resources.

While other languages and platforms can be used for data streaming applications, Node.js offers a combination of performance, ease of use, and scalability that is particularly well-suited to the demands of real-time data streaming. This makes it an attractive choice for developers looking to build efficient, scalable streaming applications.

Why Hire Node.js Developers for Data Streaming

Node.js developers bring a wealth of experience and expertise in building efficient, scalable, real-time data streaming applications. Here are a few reasons why hiring a Node.js developer can be a game-changer for your project:

- Expertise in Asynchronous Programming: Node.js is built around asynchronous, event-driven architecture. Developers skilled in Node.js are adept at leveraging this model to build highly responsive, non-blocking applications.

- Scalability: Node.js is renowned for its scalability, making it an ideal choice for applications that require real-time data streaming. Node.js developers can architect your application to handle a high volume of data efficiently, ensuring smooth scaling as your user base grows.

- Ecosystem and Community Support: The Node.js ecosystem has libraries and tools to enhance real-time data streaming applications. Developers well-versed in Node.js can integrate these tools to add functionality, improve performance, and reduce development time.

Conclusion: The Future Is Streaming

Real-time data streaming is not just a trend; it's the future of application development. Node.js emerges as a powerful platform for building these applications, offering efficiency, scalability, and robustness. However, navigating the complexities of error handling and downtime is crucial for building reliable systems. By hiring skilled Node.js developers, businesses can unlock the full potential of real-time data streaming, paving the way for innovative, resilient applications that drive success in the digital era.

Whether aiming to revolutionize your industry or enhance your existing solutions, embracing Node.js for real-time data streaming can be your ticket to staying ahead in the competitive landscape. Consider partnering with proficient Node.js developers to embark on your journey towards building cutting-edge, real-time streaming applications that not only meet but exceed your business objectives.
